Lindsay is a Content Marketing Manager at Monte Carlo.

Data quality audits are a key way to ensure your business decisions are informed by accurate, up-to-date data.

Data quality audits can help data teams:

It can seem like a daunting task to perform a data quality audit. But, whether your data resides in a warehouse, lake, lakehouse, or on-prem, it’s important to take the time to assess the accuracy of your organization’s data. Without routine data quality audits, your business runs the risk of making misinformed – and consequential – decisions.

A data quality audit is a step-by-step process to validate the accuracy and trustworthiness of your data, so that the data fueling your business decisions is high-quality. If data quality falls short at certain points in your data pipeline, an audit helps you pinpoint, triage, and resolve those problems quickly and efficiently.

Data quality audits typically include three steps: establishing key metrics and standards, collecting and analyzing the data, and identifying and documenting data quality issues.

Before getting started with a data quality audit, it’s crucial to establish a clear understanding of the reasons for the audit and the scope of the data you plan to audit.

Defining what you aim to achieve with a data quality audit will help your team communicate the value of the audit to your business stakeholders and members of the leadership team. It will also help the team members performing the audit understand why it’s important for the organization.

This is especially important if members of your data team need to set aside their usual work to perform the audit: make sure the objectives and value of that decision are clearly communicated.

Your organization may want to audit your entire arsenal of data, or you may select a few datasets to audit individually. Choose the right datasets and clearly communicate to the responsible parties why the audit is being performed. Ensure you have access to the entire dataset, especially if data is distributed across multiple systems.

One of the best ways to manage data quality across your different datasets is to categorize the data in three ways:

Then, your data team can determine which of these use cases the data quality audit should focus on.

Once you’ve adequately planned your data quality audit by setting clear objectives and choosing the data you want to assess, you’re ready to begin the data quality audit process.

There are three key steps to follow when conducting a data quality audit: establishing data quality metrics and standards, collecting and analyzing the data, and identifying and documenting data quality issues.

Let’s dive into each step of the data quality audit process.

There are several metrics to consider when assessing data quality. Depending on the specific requirements of your organization, you may prioritize additional metrics, but we recommend that data teams at least start with the following data quality metrics:

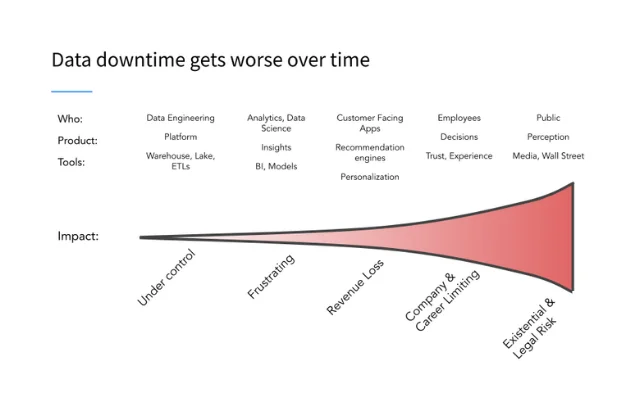

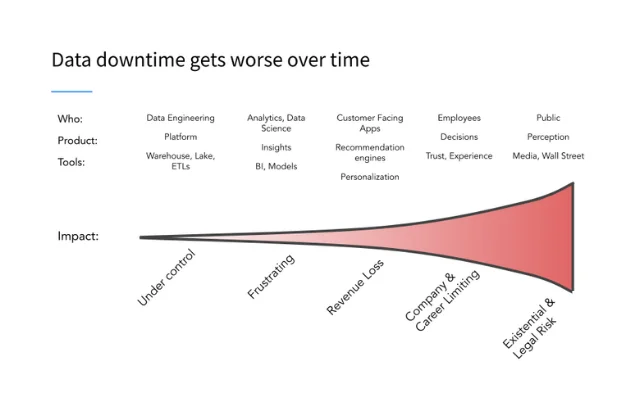

Teams typically experience at least 6 data quality incidents per table per month, and it takes, on average, 4 hours to detect those incidents and 9 hours to resolve them. These incidents are part of data downtime: the periods of time when data is erroneous, missing, or inaccurate. The formula for calculating data downtime is:

Data downtime = Number of data incidents × (Time to detection + Time to resolution)
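To make the arithmetic concrete, here is a minimal Python sketch that totals data downtime by adding each incident's detection and resolution time. When every incident has the same durations, this reduces to the number of incidents multiplied by (time to detection + time to resolution). The `Incident` record and `data_downtime` function are hypothetical names for illustration, and the example simply plugs in the averages quoted above for six incidents on a single table.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Incident:
    """A single data incident, with both durations in hours (hypothetical record shape)."""
    time_to_detection: float
    time_to_resolution: float


def data_downtime(incidents: List[Incident]) -> float:
    """Total data downtime in hours: the sum of detection + resolution time per incident.

    When every incident has the same durations, this equals
    number_of_incidents * (time_to_detection + time_to_resolution).
    """
    return sum(i.time_to_detection + i.time_to_resolution for i in incidents)


# Six incidents on one table, each at the averages quoted above (4 h to detect, 9 h to resolve).
incidents = [Incident(time_to_detection=4, time_to_resolution=9) for _ in range(6)]
print(data_downtime(incidents))  # 78
```

Summing per incident rather than multiplying averages lets the same function handle real incident logs, where detection and resolution times vary.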

On average, data teams experience over 793 hours of data downtime per month. That’s why conducting a data quality audit is so essential. Reducing data downtime improves engineer efficiency and mitigates the risk of severe data incidents with devastating consequences.

For more data quality metrics to consider in your data quality audit, check out our cheat sheet.

Once you know which data quality metrics to measure, you’ll need to gather the data to perform your analysis.

Understand where your data is stored,
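Once you know where the data lives, a first pass at collection and analysis can be as simple as profiling each table in scope. The sketch below assumes a SQL-accessible store and uses Python's built-in `sqlite3` with an in-memory table as a stand-in for a warehouse; `profile_table` and the `orders` table are hypothetical. It gathers three basic signals: row count, exact duplicate rows, and NULLs per column.

```python
import sqlite3


def profile_table(conn: sqlite3.Connection, table: str) -> dict:
    """Collect basic quality metrics for one table.

    Returns the row count, the number of exact duplicate rows, and the
    number of NULLs per column. `table` is interpolated into SQL, so it
    must come from a trusted list of table names, never from user input.
    """
    # An empty SELECT still populates cursor.description with the column names.
    cursor = conn.execute(f"SELECT * FROM {table} LIMIT 0")
    columns = [desc[0] for desc in cursor.description]

    row_count = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    distinct_rows = conn.execute(
        f"SELECT COUNT(*) FROM (SELECT DISTINCT * FROM {table})"
    ).fetchone()[0]
    null_counts = {
        col: conn.execute(
            f'SELECT COUNT(*) FROM {table} WHERE "{col}" IS NULL'
        ).fetchone()[0]
        for col in columns
    }
    return {
        "row_count": row_count,
        "duplicate_rows": row_count - distinct_rows,
        "null_counts": null_counts,
    }


# Usage with a small in-memory table standing in for a warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "acme", 10.0), (2, None, 5.0), (2, None, 5.0)],
)
report = profile_table(conn, "orders")
print(report["null_counts"])  # {'id': 0, 'customer': 2, 'amount': 0}
```

In a real warehouse you would run equivalent queries through that system's own client, but the metrics themselves carry over.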

Identifying data quality issues in the data you’ve collected is key. There are many ways for data quality issues to arise, but here are some of the most common data quality issues that appear in a data quality audit:

Once you’ve pinpointed these issues, you need to ensure you record them in a clear and consistent manner. Make sure you identify where in your data lineage these issues occurred.
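One way to keep findings consistent is to record every issue with the same fixed set of fields, including where in the lineage it appeared and who owns that stage. The `QualityIssue` record and `issues_to_csv` helper below are a hypothetical sketch, not a standard schema; the field names are assumptions you would adapt to your own audit.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class QualityIssue:
    """One audit finding. Field names are illustrative, not a standard schema."""

    dataset: str            # table or file where the issue was observed
    column: Optional[str]   # affected field, or None if the issue is table-wide
    issue_type: str         # e.g. "null values", "duplicates", "stale data"
    lineage_stage: str      # pipeline stage where it appeared, e.g. "ingestion"
    owner: str              # team responsible for that stage
    found_on: date
    notes: str = ""


def issues_to_csv(issues: List[QualityIssue]) -> str:
    """Serialize findings to CSV so every issue is recorded with the same columns."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(QualityIssue)])
    writer.writeheader()
    for issue in issues:
        writer.writerow(asdict(issue))
    return buffer.getvalue()


issues = [
    QualityIssue(
        dataset="orders",
        column="customer",
        issue_type="null values",
        lineage_stage="ingestion",
        owner="data-engineering",
        found_on=date(2024, 5, 1),
        notes="first seen after vendor feed change",
    )
]
print(issues_to_csv(issues))
```

A flat CSV is easy to share with stakeholders; the same records could just as well be written to a ticketing system or a table in your warehouse.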

Consider a situation where your analytics/BI, data science, data engineering, and product engineering teams are spending time on firefighting, debugging, and fixing data issues rather than making progress on other priorities. This can include time wasted on communication (“Which team in the organization is responsible for fixing this issue?”), accountability (“Who owns this pipeline or dashboard?”), troubleshooting (“Which specific table or field is corrupt?”), or efficiency (“What has already been done here? Am I repeating someone else’s work?”).

Conducting a data quality audit can help remediate these issues. Identify the responsible team and the pipelines they own, audit their entire dataset, and learn where the data quality issues are arising. From there, you can not only triage and resolve the problems but also get a clearer picture of where data downtime is occurring, saving other teams the time and resources they had been spending on firefighting and fixing issues.

Once you’ve completed your data quality audit, make sure you identify the following information:

This information requires a deep understanding of your data pipelines, and it can help the responsible stakeholders to address the data quality issues and start the triage process quickly and effectively.

Use the insights you gather in your data quality audit to maintain a clearer understanding of your data lineage. It’s essential to know where your data came from and where it’s going through your data pipelines, both to assess the blast radius of an incident and to pinpoint its root cause so you can resolve it faster.
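Lineage lends itself to a graph: each table points to the tables built from it. Under that assumption, the blast radius of a broken table is everything reachable downstream, and root-cause candidates are everything reachable upstream. The sketch below uses a plain breadth-first search over a hypothetical lineage dictionary; the table names and the `reachable` and `upstream_of` helpers are illustrative only.

```python
from collections import deque
from typing import Dict, List, Set


def reachable(graph: Dict[str, List[str]], start: str) -> Set[str]:
    """Breadth-first search: every node reachable from `start`, excluding `start` itself."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt != start:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def upstream_of(graph: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Reverse every edge so the graph points from each table to its sources."""
    reversed_graph: Dict[str, List[str]] = {}
    for source, targets in graph.items():
        for target in targets:
            reversed_graph.setdefault(target, []).append(source)
    return reversed_graph


# Hypothetical lineage: each table maps to the tables built from it.
lineage = {
    "raw.orders": ["staging.orders"],
    "raw.customers": ["staging.customers"],
    "staging.orders": ["marts.revenue"],
    "staging.customers": ["marts.revenue", "marts.churn"],
    "marts.revenue": ["dashboard.exec_kpis"],
}

broken = "staging.customers"
print(sorted(reachable(lineage, broken)))               # ['dashboard.exec_kpis', 'marts.churn', 'marts.revenue']
print(sorted(reachable(upstream_of(lineage), broken)))  # ['raw.customers']
```

The downstream set tells you which dashboards and consumers to warn; the upstream set narrows where to look for the root cause.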

This understanding of your data pipelines requires continuous data monitoring – and that’s where data observability can help.

A data observability tool is a great way to monitor data quality at scale. Rather than having to perform manual query tests over and over again, automated data observability helps data teams get end-to-end coverage across their entire data pipeline.

Monte Carlo also offers automated, machine learning-powered data quality checks for some of the most common data quality issues, such as freshness, volume, and schema changes. This helps streamline the data quality audit process and quickly pinpoint the root cause of an issue.
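To illustrate what freshness, volume, and schema checks look for, here is a simple rule-based stand-in written in Python. It is not how Monte Carlo's machine learning monitors work: the fixed thresholds (a 24-hour freshness window, a ±50% volume band around the historical mean) are arbitrary assumptions chosen for the example, and all function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def check_freshness(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """Freshness: True if the table was updated within `max_age` of `now`."""
    return now - last_updated <= max_age


def check_volume(row_count: int, history: List[int], tolerance: float = 0.5) -> bool:
    """Volume: True if row_count is within +/- `tolerance` (a fraction) of the historical mean."""
    if not history:
        return True  # no baseline yet, so nothing to compare against
    mean = sum(history) / len(history)
    return abs(row_count - mean) <= tolerance * mean


def schema_changes(expected: Dict[str, str], actual: Dict[str, str]) -> Dict[str, List[str]]:
    """Schema: compare column -> type mappings and list added, removed, and retyped columns."""
    shared = expected.keys() & actual.keys()
    return {
        "added": sorted(actual.keys() - expected.keys()),
        "removed": sorted(expected.keys() - actual.keys()),
        "retyped": sorted(col for col in shared if expected[col] != actual[col]),
    }


now = datetime(2024, 5, 2, 12, tzinfo=timezone.utc)
print(check_freshness(datetime(2024, 5, 1, 6, tzinfo=timezone.utc), timedelta(hours=24), now))  # False: 30 hours old
print(check_volume(400, [1000, 1100, 900]))  # False: 60% below the mean of 1000
print(schema_changes({"id": "INTEGER", "amount": "REAL"},
                     {"id": "TEXT", "amount": "REAL", "region": "TEXT"}))
# {'added': ['region'], 'removed': [], 'retyped': ['id']}
```

Hand-tuned thresholds like these are exactly what becomes hard to maintain across hundreds of tables, which is the gap automated monitoring is meant to close.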

If your team is ready to automate the data quality audit process, talk to our team to see how data observability can take your data quality to the next level.